
This page lists the errors and warnings you might encounter while using the CSV Importer. It includes those you might see in the application web interface, and those you might find in the CSV reports generated by the Processing and Transferring steps.

...

| Info |

|---|

About errors vs. warnings: The meaning of error is different depending on where you see that term. On the batch processing step screens, an error means something is so wrong that the overall batch process failed or cannot be carried out. You will not be able to proceed to the next step of the import. On this screen, a warning means there is something you probably want to check before you proceed because it might indicate something unexpected and potentially destructive will happen if you continue to the next step. In the CSV reports, you may see errors on an individual row, without seeing any error on the batch processing step screen. This means that record could not be processed or transferred. You will be able to move forward to the next batch processing step, but individual records with errors on one step will also have an error on the next step. For instance, if a row could not be processed into a valid CollectionSpace record, there is no record to transfer to CollectionSpace, so there will also be an error for that row in the Transferring step. When there are individual-row errors in the CSV report, you will always be shown a warning about those problems on the batch processing step screen. The reasoning behind this slightly confusing handling is: if you are importing 100 rows, and 1 row can’t be processed, it’s likely you’d prefer to go ahead and batch transfer the 99 rows that were successfully processed. Then you can just enter the problem record manually. Likewise, if transfer of 2 out of 5000 records fails due to an internet blip, you certainly do not want the entire transfer job to fail and stop with an error. You’ll want to know which record(s) did not transfer so you can enter them manually, or with a new batch import of just 2 records. |

...

The report contains one row per unique not-found term. That is, if you have used a new term for a given vocabulary/authority in 100 different records or fields, it will appear here once.

The report has four columns: term type (e.g. personauthorities), term subtype (e.g. person or ulan_pa), identifier (the “shortID” the mapping process created for the new term), and value (the term value as it was found in your original data).

The intent of this report is two-fold:

If new terms are unexpected, you can use this information and search your CollectionSpace instance to investigate whether case differences, punctuation differences, or typos caused your terms not to match. Then you can update your original file with the expected term form. OR…

If you want to create fuller term records, before linking this data to the terms, you can use the values in this form to create new CSVs for separate batch import of term records. To do that:

Filter this report to a given type/subtype combination (e.g. local person names)

Copy the values out into the termDisplayName column of the Person CSV template

Add in any more details you’d like to import

Batch import each of those CSV forms (one batch per term type).
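The filter-and-copy steps above can also be scripted. The following is a minimal Python sketch and is not part of the CSV Importer. The header names `type`, `subtype`, and `value` are assumptions based on the four columns described above, so check them against your actual report before relying on it. The sample data is made up.

```python
import csv
import io


def terms_to_template_rows(report_file, term_type, term_subtype):
    """Return template rows for one type/subtype combination.

    The column names `type`, `subtype`, and `value` are assumed; adjust
    them to match the headers in your own report.
    """
    rows = []
    for row in csv.DictReader(report_file):
        if row["type"] == term_type and row["subtype"] == term_subtype:
            # The report value goes into the template's termDisplayName column.
            rows.append({"termDisplayName": row["value"]})
    return rows


def write_template(rows, out_file):
    """Write the rows as a CSV with a single termDisplayName column."""
    writer = csv.DictWriter(out_file, fieldnames=["termDisplayName"])
    writer.writeheader()
    writer.writerows(rows)


# Example with made-up report data.
sample_report = io.StringIO(
    "type,subtype,identifier,value\n"
    "personauthorities,person,JaneDoe123,Jane Doe\n"
    "personauthorities,ulan_pa,JohnRoe456,John Roe\n"
    "personauthorities,person,AnnLee789,Ann Lee\n"
)
local_persons = terms_to_template_rows(sample_report, "personauthorities", "person")
output = io.StringIO()
write_template(local_persons, output)
```

Any further details you want to import would be added as extra columns before writing the file.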

...

After importing your new Terms, you need to delete the batch you started with and start over. The processing step on the new batch will match to the terms you just imported.

If you proceed with this batch, it will create new stub records with the identifiers generated by the batch processor, which don’t match the identifiers generated by CollectionSpace when you imported the fuller term records. You will end up with duplicate term records!

...

If you do not want to create fuller term records, you can proceed with this batch without importing the terms separately, and the CSV Importer will create “stub” records for the new Terms that only include a Display name.

Warnings and errors

The following describe what you may see in the processing_report CSV.

ERR: unparseable_date

The processor cannot convert the given date value into a valid plain (i.e. non-structured) date value.

Records with this error will not be transferred, since you would lose the data in any field(s) with this error.

The processing report tells you the affected column and unparseable date value for each record with this error.

The solution to this error is to use a date format that is accepted by the field you are trying to import data into. For example, if you get this error in the Object annotationDate field, test that the date value you enter into the CSV is accepted by the application if you manually enter it into the Annotation Date field of a record.

WARN: boolean_value_transform

One or more values in a boolean field in your CSV was something other than true or false. The processor has attempted to convert your 0, 1, y, n, or other value into true or false, but you may want to review that the values you gave it were converted as expected.

The processing report tells you what each value triggering this warning was converted to, so that you may review.
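If you would rather avoid this warning, you can convert boolean values to true or false yourself before importing. The sketch below is one way to do that in Python. The mapping is an assumption for illustration only; the CSV Importer's own conversion rules are not documented here and may differ.

```python
# Assumed mapping for illustration; the CSV Importer's own rules may differ.
TRUE_VALUES = {"true", "1", "y"}
FALSE_VALUES = {"false", "0", "n"}


def normalize_boolean(value):
    """Return 'true' or 'false', or None if the value is not recognized."""
    cleaned = value.strip().lower()
    if cleaned in TRUE_VALUES:
        return "true"
    if cleaned in FALSE_VALUES:
        return "false"
    # Unrecognized values are left for a person to review.
    return None


converted = [normalize_boolean(v) for v in ["Y", "0", " true ", "maybe"]]
```

Returning `None` for anything unrecognized keeps ambiguous values visible instead of silently guessing.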

WARN: multiple_records_found_for_term

If you receive this warning, the processing_report will have a column with this as the header value.

For rows where this warning applies, the column is filled in with the following info:

the column the term is used in (the end of this column’s name indicates which exact authority vocabulary was being searched: person local vs. person ulan, for example)

the term for which multiple records were found

how many records were found

the API record URL for the term that was used in the processed record

EXAMPLE: perhaps you have two Place authority terms with the same term display name value “New York”. Other data in the authority records distinguishes that one record is for the state, while the other is for the city. However, if you use “New York” as a value in a place-authority controlled field in a CSV import, it’s impossible to know which “New York” authority record should be linked to by this field.

The CSV Importer uses the first matching authority record it receives to populate the field. If the first record was for the state and you meant the state, then you have no problem. If you meant the city, then you either need to make a note to manually fix this after you transfer the records OR cancel your batch, update one or both “New York” term display name values to distinguish them, update your CSV to use the new display name value, and re-import.

The best way to avoid this warning is to create unique terms within a given authority or vocabulary.
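One way to check for non-unique terms ahead of time is to look for repeated display names within a single authority vocabulary. This is a minimal sketch, assuming you already have the display names for one vocabulary as a list of strings; how you export that list is up to you. It compares names exactly, since case and punctuation differences are treated as different terms.

```python
from collections import Counter


def find_duplicate_terms(display_names):
    """Return display names that occur more than once (exact match)."""
    counts = Counter(display_names)
    return sorted(name for name, count in counts.items() if count > 1)


# Made-up display names from a single Place authority vocabulary.
place_terms = ["New York", "Boston", "New York", "Albany"]
duplicates = find_duplicate_terms(place_terms)
```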

WARN: unparseable_structured_date

The processor could not convert the given date value into a valid structured date.

Records with this warning will be transferred, as no data is lost in this case. The given date value will be imported as the value of the display date (i.e. the value you see in the record before you click in to show the detailed structured date fields). The Note field in the date details is populated with "date unparseable by batch import processor". However, the detailed structured date fields will not be populated, which can affect searching, sorting, or reporting on these dates.

The processing report tells you the affected column and unparseable date value for each record with this warning.

There are several approaches to dealing with this warning:

If you do not care whether structured date detail fields get populated, or want to defer the issue to a later cleanup project, you can ignore the warning.

Fix the data in the CSV and re-import. Move values that are not actually dates to other fields. Use standard date formats where possible. Note that the date processing done by this tool will have somewhat different results than what you get entering structured date values manually (for example: try entering “10-19-22” as Object Production Date manually vs. importing it via the CSV Importer).

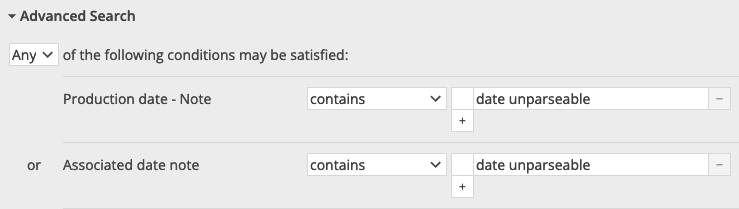

Fix the dates in CollectionSpace after importing. If you received this warning on dates in Object Production Date and Associated Date fields, you could find all affected records in CollectionSpace via this search:

Transferring

WARNING: Some records did not transfer. See CSV report for details

...

Use the CSV report to create a new import file

Delete rows for records that transferred successfully

Delete any INFO, WARNING, or ERROR data columns added by the CSV Importer

Create a new batch and make sure to select appropriate transfer actions on the Transferring step
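The first three steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not a feature of the CSV Importer: the status column name (`transfer_status`), its success value, and the column prefixes are all placeholders, so set them to match the columns in your own report. The sample data is made up.

```python
import csv
import io


def build_retry_rows(report_file, status_column, success_value,
                     added_prefixes=("INFO", "WARN", "ERR")):
    """Return (fieldnames, rows) for records that did not transfer.

    `status_column`, `success_value`, and `added_prefixes` are placeholders;
    set them to match the columns in your own report.
    """
    reader = csv.DictReader(report_file)
    # Drop the status column and any column the importer added.
    keep = [
        name for name in reader.fieldnames
        if name != status_column and not name.upper().startswith(added_prefixes)
    ]
    rows = [
        {name: row[name] for name in keep}
        for row in reader
        if row[status_column] != success_value
    ]
    return keep, rows


# Example with made-up report data and a hypothetical status column.
sample_report = io.StringIO(
    "objectNumber,title,WARN: example_warning,transfer_status\n"
    "2024.1,Vase,,success\n"
    "2024.2,Bowl,some warning text,failure\n"
)
fieldnames, retry_rows = build_retry_rows(sample_report, "transfer_status", "success")
```

The returned field names and rows can be written out with `csv.DictWriter` to produce the new import file. Check that none of your own data columns start with one of the prefixes before running this, or they will be dropped too.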

ERROR: Could not delete because other records in the system are still referencing this authority term

As in the web application, you cannot delete an authority term if it is still being used in other records.

Visit the un-deleted term authority record in CollectionSpace and look in the right sidebar to see what records it is “Used by”. Visit those records and remove the term from them. Then you should be able to delete the term manually or via a new CSV Importer batch.

If your term does not appear to be “Used by” any other records, but you are getting this message, it is likely that records using this term were “soft deleted” at some point. These records no longer show up in the application, but still exist in the underlying data used by the services API. Contact your CollectionSpace admin support and ask them to check for and delete from the database any soft deleted records using the term you wish to delete.

ERROR: No processed data found. Processed record may have expired from cache, in which case you need to start over with the batch.

As described in the Known issues/limitations documentation, processed records are only kept in memory in the CSV Importer for a day.

This indicates there are no longer any records to be transferred to CollectionSpace because too much time has elapsed between processing and attempting to transfer. You will need to start over, reprocess the batch, and transfer the processed records more promptly.

ERROR: [partial URI indicating a record type and ids] DELETE

For an authority term record, this will look like:

ERROR: /conceptauthorities/1824cf1c-958b-4c93-a42b/items/42fe73c3-0f81-4c15-8302-e6144877dbc3 DELETE

For an object, this will look like:

ERROR: /collectionobjects/850161ed-466c-4af2-bc45 DELETE
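If you have many of these messages to review, it can help to split each one into its record type and ids. The sketch below is a small Python helper written for this page, not something the CSV Importer provides. It assumes messages follow the two shapes shown above and that the `ERROR:` prefix may or may not be present.

```python
import re

# Matches the two shapes shown above: authority items and plain records.
DELETE_ERROR = re.compile(
    r"^(?:ERROR:\s*)?/(?P<service>[^/]+)/(?P<record_id>[^/\s]+)"
    r"(?:/items/(?P<item_id>[^/\s]+))?\s+DELETE$"
)


def parse_delete_error(message):
    """Split a DELETE error message into service name and ids, or return None."""
    match = DELETE_ERROR.match(message.strip())
    if match is None:
        return None
    return match.groupdict()


authority = parse_delete_error(
    "ERROR: /conceptauthorities/1824cf1c-958b-4c93-a42b/items/"
    "42fe73c3-0f81-4c15-8302-e6144877dbc3 DELETE"
)
obj = parse_delete_error("/collectionobjects/850161ed-466c-4af2-bc45 DELETE")
```

For an authority term, `record_id` is the id of the authority and `item_id` is the id of the term itself. For other record types, `item_id` is `None`.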

If you are getting this error on ALL records you transfer as deletes:

Your CollectionSpace user probably does not have permission to do “hard deletes.” Someone with the TENANT_ADMINISTRATOR Role in your CollectionSpace instance will need to handle batches of deletes for you (or assign you to that Role).

If you are seeing this error on only some delete transfers, while others are successful:

Save a copy of the full CSV report generated by the transfer step and leave the batch in the CSV Importer. If you are a Lyrasis hosted client, or have a Lyrasis Zendesk account, send a copy of that report in a new Zendesk ticket so we can look into the problem. If you are not a Lyrasis hosted client, we are unlikely to have the access to your system that we would need to determine the cause of this issue. However, you are welcome to reach out to the CollectionSpace team in the usual ways and we can check whether there’s anything we can tell you.